Who Clicks On Advertisements During an Online Reading Assessment?

An Analysis of ePIRLS 2016 Process Data in the Age of Online Learning

Introduction

Just like everyone else in our modern society, students around the world are increasingly able to access and consume online information both in and out of school. This is especially true in 2020, as the pandemic has intensified the rise of online learning worldwide. How well can students read online? How do they stay focused? And how does their online reading literacy compare in a global context? The international assessment ePIRLS, first administered in 2016, offers insights into these questions. This innovative assessment of online reading measures how well 4th-grade students around the world read, interpret, and critique information online. The official findings have been published and the dataset has been made available, both of which provide policymakers with information essential to developing appropriate public policies in our age of online information.

While online reading provides new opportunities that offline reading does not, it also presents readers with new challenges, one of which is the potential for distractions (Goldstein et al., 2014). Remember that time you were reading an article online when, halfway through, an advertisement caught your eye, and 10 minutes later you had finished purchasing whatever it was and completely forgotten about the article? The designers of ePIRLS surely remember such moments, so they placed advertisements on many of the webpages from which students are supposed to read and collect information. The hypothesis is that students working on school assignments or research projects will finish sooner if they focus on finding critical information and are not distracted (Mullis et al., 2017).

As a digitally based assessment, ePIRLS collects data on the test-taking process, including whether or not students clicked on those advertisements. These data can provide insights into the relationship between students’ performance and their testing behavior. However, little research on process data has been conducted on ePIRLS or, for that matter, on other international large-scale assessments. In this post, I use ePIRLS data to test the hypothesis above and to explore further how students around the world read and behave when presented with the distraction of online advertisements.

Data and Methods

Let’s first understand how the ePIRLS assessment works and where the advertisements appear in the assessment.

The ePIRLS 2016 assessment was completed by 4th-grade students in 16 education systems. (They are called education systems rather than countries because some of them are city-level jurisdictions that participated in the assessment as "benchmarking participants.") The assessment consists of five modules on science and social studies topics, with each module lasting up to 40 minutes.

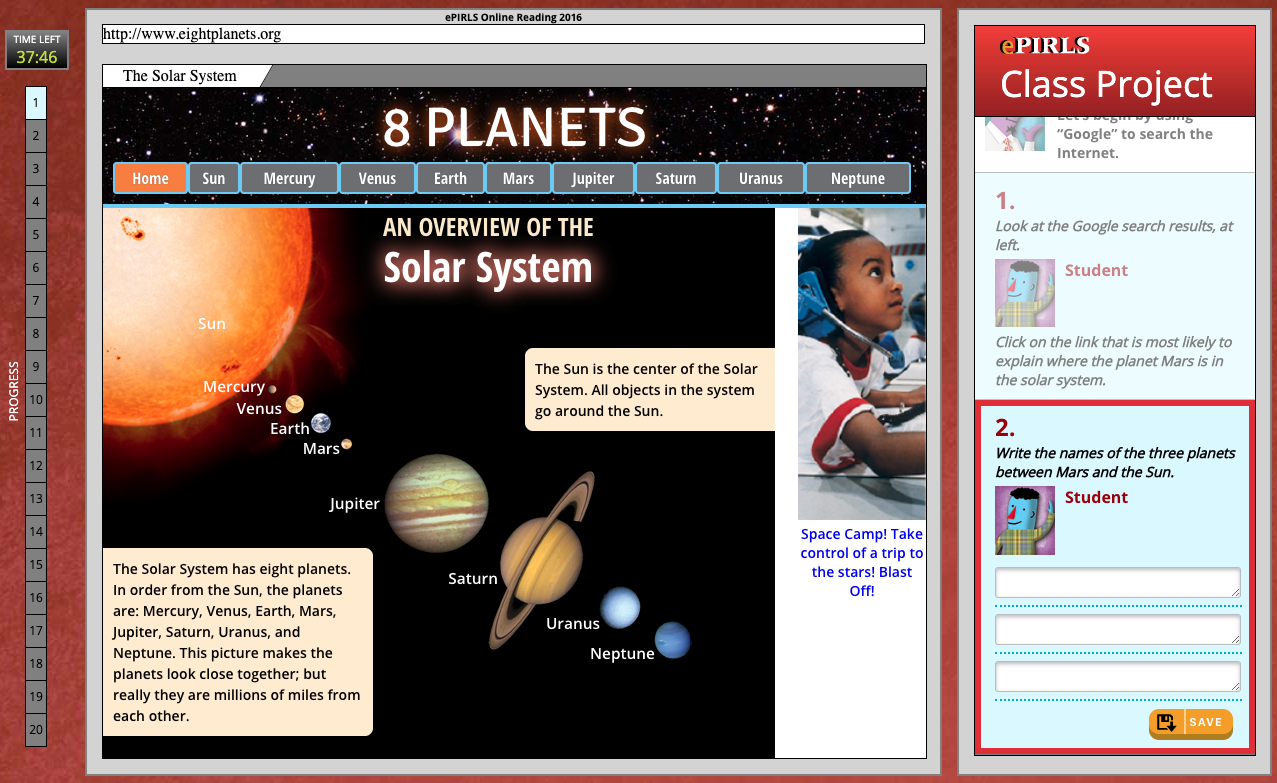

Each participating student took a randomly assigned combination of two of the five modules. Each module was structured as a class project and presented in a simulated internet environment that contains advertisements, such as the ad for space camp in the screenshot from the module "Mars" shown below.

Out of the five modules, "Mars" and "Dr. Elizabeth Blackwell" have been released online, so they can be viewed in the same way as they were presented to participating students. Below is a video walk-through (also available online) about the logistics of the assessment.

ePIRLS recorded information on how many times each student clicked on the advertisements. This information, along with students' online reading performance, is used for my analyses. All analyses follow the official instructions to account for the complex survey design of ePIRLS and use all five plausible values of the online reading achievement scale.
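To make the plausible-value step concrete, here is a minimal sketch in Python of how one estimate per plausible value is commonly pooled into a single estimate and standard error (Rubin's combining rules). It is an illustration, not the code used for this post: the function name `combine_plausible_values` and the input numbers are hypothetical, and the per-plausible-value sampling variances are assumed to have been computed already with the replication method that the official ePIRLS instructions prescribe.

```python
from math import sqrt
from statistics import mean, variance


def combine_plausible_values(estimates, sampling_variances):
    """Pool one estimate per plausible value into a point estimate and SE.

    estimates: the statistic (e.g., a weighted mean score) computed
        separately with each plausible value.
    sampling_variances: the sampling variance of each of those estimates,
        assumed here to come from the survey's replication procedure.
    """
    m = len(estimates)
    if m < 2 or m != len(sampling_variances):
        raise ValueError("need matching lists with at least two entries")

    point_estimate = mean(estimates)       # average across plausible values
    within = mean(sampling_variances)      # average sampling variance
    between = variance(estimates)          # variance across PVs (divisor m - 1)
    total_variance = within + (1 + 1 / m) * between
    return point_estimate, sqrt(total_variance)


# Hypothetical inputs: five estimates, one per plausible value.
point, se = combine_plausible_values(
    [500.0, 502.0, 498.0, 501.0, 499.0],
    [4.0, 4.0, 4.0, 4.0, 4.0],
)
print(f"estimate = {point:.1f}, standard error = {se:.2f}")
```

The between-plausible-value term captures the measurement uncertainty in each student's score, which is why using a single plausible value, or averaging the five values per student first, would tend to understate the standard error.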

Findings

Let’s dive into the results, which are organized around the following four research questions.

- What are students’ advertisement-clicking patterns in each of the five modules?

- What percentage of students clicked on advertisements at least once in the assessment?

- How are students’ advertisement-clicking patterns associated with their online reading achievement?

- How are students’ advertisement-clicking patterns associated with time spent completing the assessment?
